Volumetric Fog Renderer

This project was part of my school curriculum, but was specifically set up so that we could choose a subject we'd like to work on. Since I was starting to get interested in graphics programming, I wanted to do something in that field and chose to implement volumetric fog.

The repository is based on part of Bee, the BUAS engine, which currently contains NDA code (PS5-related), so I can't link it directly.

Graphics Programmer

Solo

8 weeks

Windows

Bee engine (made by BUAS)

OpenGL

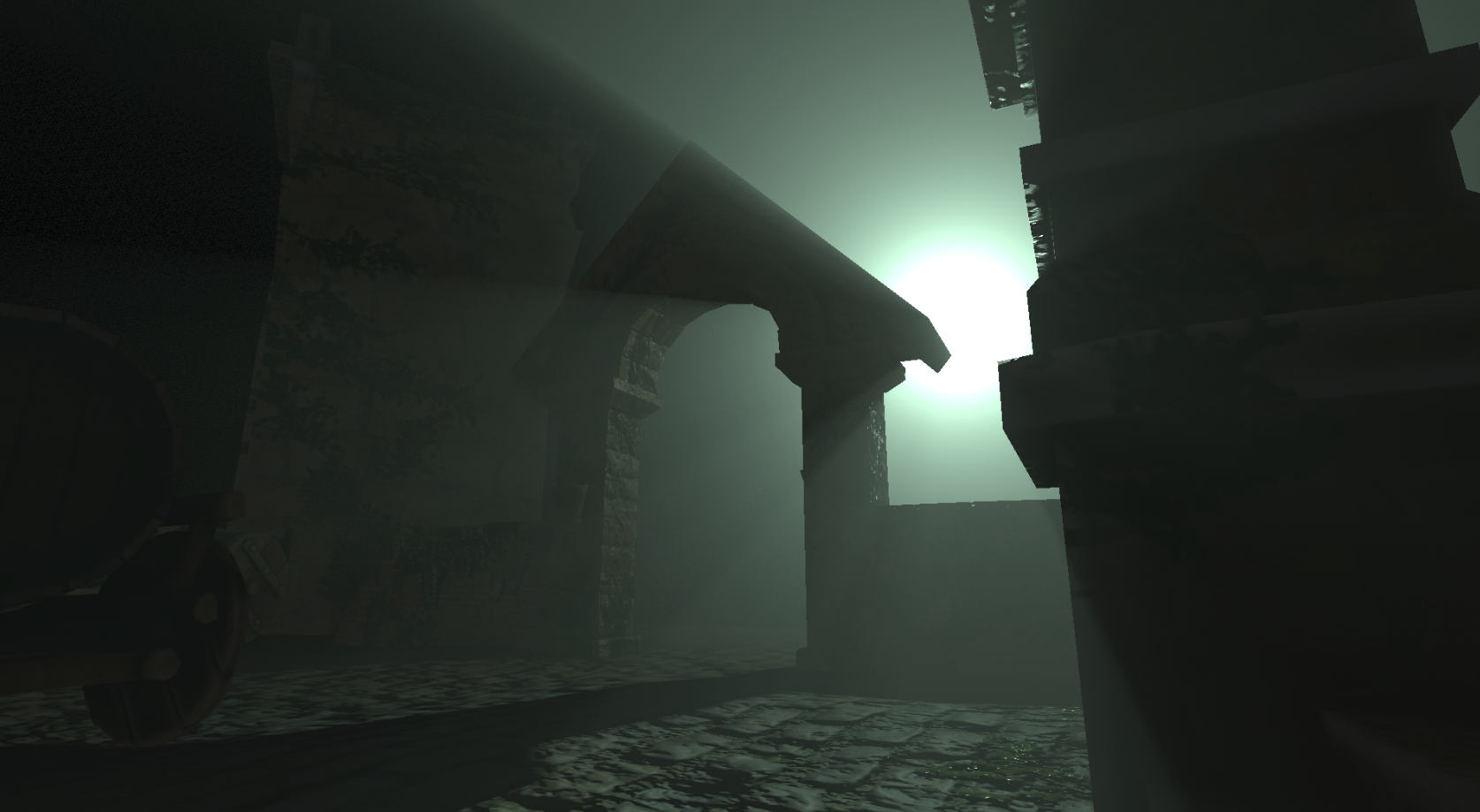

Uniform Fog Everywhere

I was completely new to the subject, so I started by researching different ways of implementing volumetric fog. Given the project's time limit, I opted to implement a simpler algorithm based on an older paper (Tóth, Umenhoffer / Real-time Volumetric Lighting in Participating Media, 2009).

For this project I had a little head start with Bee Engine (made by BUAS), which already featured a forward renderer written in OpenGL. To implement the fog, I read through the aforementioned paper and 'translated' its formulas into code in a full-screen post-processing compute pass. I also used Tomas Ohberg's implementation (link to YouTube, link to GitLab) to cross-reference my code and results, since it follows the same paper and shows the results I was aiming for.
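As a rough illustration of what such a pass computes per pixel, here is a minimal CPU-side sketch in C++ of ray-marching through uniform fog with Beer-Lambert attenuation. It is not the paper's exact formulation or the shader from this project: the names `MarchUniformFog`, `extinction`, `scattering`, and `lightIntensity` are hypothetical, the light is assumed constant along the ray, and shadows and the phase function are left out.

```cpp
#include <cassert>
#include <cmath>

struct FogResult {
    float inScattered;   // light scattered toward the camera along the ray
    float transmittance; // fraction of the scene colour that survives the fog
};

// Beer-Lambert transmittance through a homogeneous medium.
inline float Transmittance(float extinction, float distance) {
    return std::exp(-extinction * distance);
}

// March from the camera to the fragment through uniform fog.
// All coefficients are illustrative assumptions, not values from the project.
inline FogResult MarchUniformFog(float rayLength, int steps, float extinction,
                                 float scattering, float lightIntensity) {
    assert(steps > 0);
    const float stepSize = rayLength / static_cast<float>(steps);
    FogResult result{0.0f, 1.0f};
    for (int i = 0; i < steps; ++i) {
        // Light scattered in this step, dimmed by the fog already crossed.
        result.inScattered += result.transmittance * scattering * lightIntensity * stepSize;
        // Attenuate everything behind this step.
        result.transmittance *= Transmittance(extinction, stepSize);
    }
    return result;
}
```

The final pixel would then be roughly `sceneColor * transmittance + fogColor * inScattered`. With uniform density the total transmittance equals `exp(-extinction * rayLength)` regardless of the step count.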

This is also the part of the project I wrote my blog post about, which goes into more depth on dissecting the formulas presented in the paper and translating them into shader code:

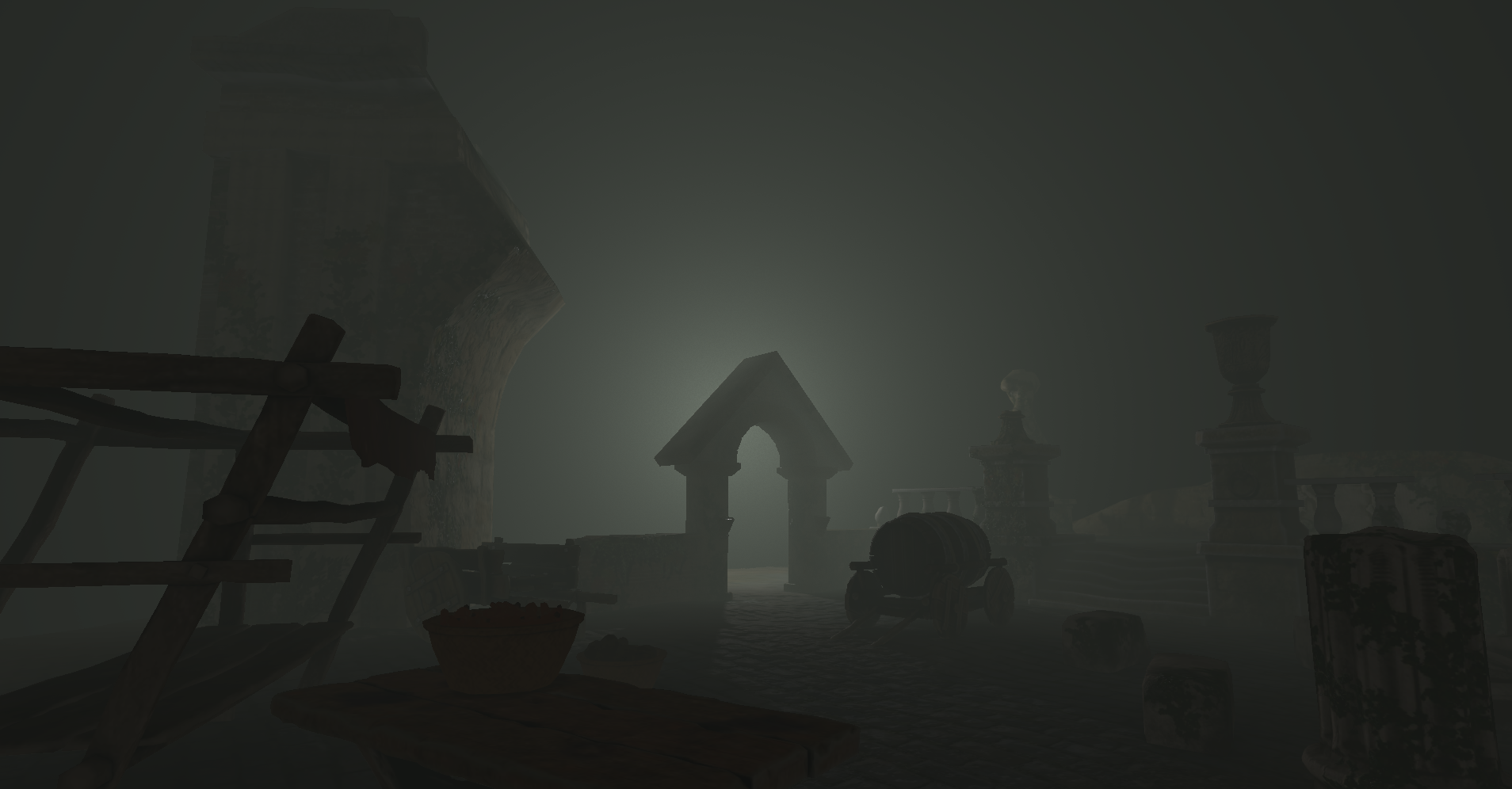

Non-Uniform Local Fog

For my project goal, I wanted to have localized fog volumes. I chose AABBs (axis-aligned bounding boxes) for these, as I found them fairly easy to work with and they leave room for possible future stretch goals, such as moving towards a voxel-based volume renderer.

Instead of ray-marching over the whole camera->frag ray from beginning to end, I do an AABB-line intersection per fog volume, between the fog AABB and the camera->frag line, and only ray-march over the part of the ray that intersects the AABB. This also saves ray-marching work, since (usually) not every camera->frag ray has a fog AABB on its path.
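The clipping step can be sketched with the standard slab test. This is a minimal C++ illustration, not the project's shader code: `ClipRayToAabb`, `Segment`, and the other names are hypothetical, and `dir` is assumed to be normalized so that `t` values are world-space distances.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <utility>

struct Vec3 { float x, y, z; };
struct Aabb { Vec3 min, max; };
struct Segment { float tEnter, tExit; }; // distances along the ray

// Clip the camera->frag segment [0, rayLength] against a fog box (slab test).
// Returns false when the segment misses the box, so no march is needed.
inline bool ClipRayToAabb(const Vec3& origin, const Vec3& dir, float rayLength,
                          const Aabb& box, Segment& out) {
    assert(rayLength >= 0.0f);
    const float o[3]  = {origin.x, origin.y, origin.z};
    const float d[3]  = {dir.x, dir.y, dir.z};
    const float lo[3] = {box.min.x, box.min.y, box.min.z};
    const float hi[3] = {box.max.x, box.max.y, box.max.z};

    float tMin = 0.0f;      // never start behind the camera
    float tMax = rayLength; // never march past the fragment
    for (int axis = 0; axis < 3; ++axis) {
        if (std::fabs(d[axis]) < 1e-8f) {
            // Ray is parallel to this slab: it misses if it starts outside.
            if (o[axis] < lo[axis] || o[axis] > hi[axis]) return false;
            continue;
        }
        const float inv = 1.0f / d[axis];
        float t0 = (lo[axis] - o[axis]) * inv;
        float t1 = (hi[axis] - o[axis]) * inv;
        if (t0 > t1) std::swap(t0, t1);
        tMin = std::max(tMin, t0);
        tMax = std::min(tMax, t1);
        if (tMin > tMax) return false; // slabs do not overlap on the segment
    }
    out.tEnter = tMin;
    out.tExit = tMax;
    return true;
}
```

The ray-march then only covers `[tEnter, tExit]`. Starting `tMax` at the fragment distance also handles fog boxes that are partly hidden behind geometry.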

Unfortunately, this approach has a logic error, which I discovered later in the project. When looking at my local fog volumes from different angles, one can overlap the other in an unexpected way, since I'm just looping over the fog boxes and adding up their scattering and transmittance, without considering which volume the ray hits first.

By the end of the project I had different priorities, so I didn't manage to resolve this issue. In the future, however, this could be resolved either by sorting the fog volumes based on distance from the ray-marching point (a little sloppy, as this won't properly account for what happens when volumes overlap each other) or by expanding this implementation towards a voxel-based volume renderer.
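The sorting idea could look roughly like the C++ sketch below. It was not implemented in this project. `VolumeSample` and `CompositeFrontToBack` are hypothetical names, and the sketch assumes each fog volume has already been marched on its own and that volumes do not overlap along the ray.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Result of marching one fog volume along one ray (hypothetical layout).
struct VolumeSample {
    float tEnter;        // distance at which the ray enters the volume
    float inScattered;   // light scattered toward the camera inside the volume
    float transmittance; // fraction of light that passes through the volume
};

struct FogResult {
    float inScattered;
    float transmittance;
};

// Sort the per-volume results by entry distance, then composite front to back,
// so that nearer fog dims the light scattered by volumes behind it.
inline FogResult CompositeFrontToBack(std::vector<VolumeSample> samples) {
    std::sort(samples.begin(), samples.end(),
              [](const VolumeSample& a, const VolumeSample& b) {
                  return a.tEnter < b.tEnter;
              });
    FogResult total{0.0f, 1.0f};
    for (const VolumeSample& s : samples) {
        total.inScattered += total.transmittance * s.inScattered;
        total.transmittance *= s.transmittance;
    }
    return total;
}
```

Because the input is sorted first, the result no longer depends on the order in which the fog boxes are stored. In a shader, the same idea would need a small fixed-size array and an insertion sort instead of `std::vector` and `std::sort`.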

As the next step towards my project goal, I wanted the fog volumes to have non-uniform density. To accomplish this, I sample the fog density from 3D noise textures, which are generated at the start of the program using the FastNoise2 library.

Since the volume no longer has uniform density, I could no longer calculate in one go how much density the light travels through on its way to the march position. I had to write an additional ray-marching pass from the march position towards the light position, which accumulates density along the way to more accurately calculate how much light reaches the march position.
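A minimal C++ sketch of such a secondary march is shown below. It is an illustration under assumptions rather than the project's shader: `LightTransmittance` and `extinctionScale` are hypothetical names, `sampleDensity` stands in for the 3D noise texture lookup, and the march covers the full distance to the light instead of stopping at the fog volume's boundary.

```cpp
#include <cassert>
#include <cmath>
#include <functional>

struct Vec3 { float x, y, z; };

// Fraction of light that reaches marchPos from lightPos through non-uniform fog.
// sampleDensity is a hypothetical callback replacing the 3D texture fetch.
inline float LightTransmittance(const Vec3& marchPos, const Vec3& lightPos, int steps,
                                float extinctionScale,
                                const std::function<float(const Vec3&)>& sampleDensity) {
    assert(steps > 0);
    const float n = static_cast<float>(steps);
    const Vec3 delta{(lightPos.x - marchPos.x) / n,
                     (lightPos.y - marchPos.y) / n,
                     (lightPos.z - marchPos.z) / n};
    const float stepSize =
        std::sqrt(delta.x * delta.x + delta.y * delta.y + delta.z * delta.z);

    float opticalDepth = 0.0f;
    for (int i = 0; i < steps; ++i) {
        // Sample in the middle of each step.
        const float t = static_cast<float>(i) + 0.5f;
        const Vec3 p{marchPos.x + delta.x * t,
                     marchPos.y + delta.y * t,
                     marchPos.z + delta.z * t};
        opticalDepth += sampleDensity(p) * stepSize;
    }
    // Beer-Lambert: accumulated density turns into attenuation.
    return std::exp(-extinctionScale * opticalDepth);
}
```

This runs once per primary march step, so the cost is roughly the primary step count times the light step count per pixel, which is why the number of light steps is usually kept small.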

Profiling & Optimizing

Lastly, as my application is GPU-bound, I used NVIDIA Nsight Graphics to profile it and identify the biggest bottlenecks to tackle with optimizations. This was my first time using Nsight Graphics thoroughly and regularly, which taught me how to analyze captured data and choose which optimization to implement.

The first major bottleneck was the 3D noise calculation. Before opting for 3D textures, I generated the noise in the shader in a less than optimal way. After identifying this bottleneck and doing a little research, I switched to 3D textures.

However, I quickly noticed that the 3D textures weren't quite optimal either. When comparing data from frame captures with and without noise, I noticed that the texture fetches from the 3D textures made the biggest difference. To optimize this, I did several things:

- Lowered the noise texture's resolution from 128 to 64 to reduce bandwidth.
- Lowered the precision of the 3D texture's internal format from 32F to 16F to reduce bandwidth.
- Generated mipmaps for the 3D texture and sampled LOD level 4 to reduce bandwidth.
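To put rough numbers on these changes, the C++ sketch below computes the memory footprint of one mip level of a cubic 3D texture. It assumes a single-channel format (4 bytes per texel for 32F, 2 bytes for 16F), which the text above does not state, and `MipResolution` and `MipBytes` are hypothetical helper names. Footprint is only a proxy for bandwidth and is not a measured result.

```cpp
#include <cassert>
#include <cstddef>

// Resolution per axis of a given mip level: halves each level, never below 1.
inline std::size_t MipResolution(std::size_t baseRes, unsigned mip) {
    const std::size_t res = baseRes >> mip;
    return res > 0 ? res : 1;
}

// Bytes used by one mip level of a cubic 3D texture.
// bytesPerTexel is an assumption: 4 for one 32-bit float channel, 2 for 16-bit.
inline std::size_t MipBytes(std::size_t baseRes, unsigned mip, std::size_t bytesPerTexel) {
    assert(bytesPerTexel > 0);
    const std::size_t res = MipResolution(baseRes, mip);
    return res * res * res * bytesPerTexel;
}
```

Under these assumptions, `MipBytes(128, 0, 4)` is 8,388,608 bytes (8 MiB), `MipBytes(64, 0, 2)` is 524,288 bytes (512 KiB), and `MipBytes(64, 4, 2)`, the 4x4x4 level that LOD 4 selects, is only 128 bytes. A smaller working set is more likely to stay in the texture cache, at the cost of much coarser noise detail.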

TODO: Paragraph about downscaling and bilateral upsampling.