Introduction

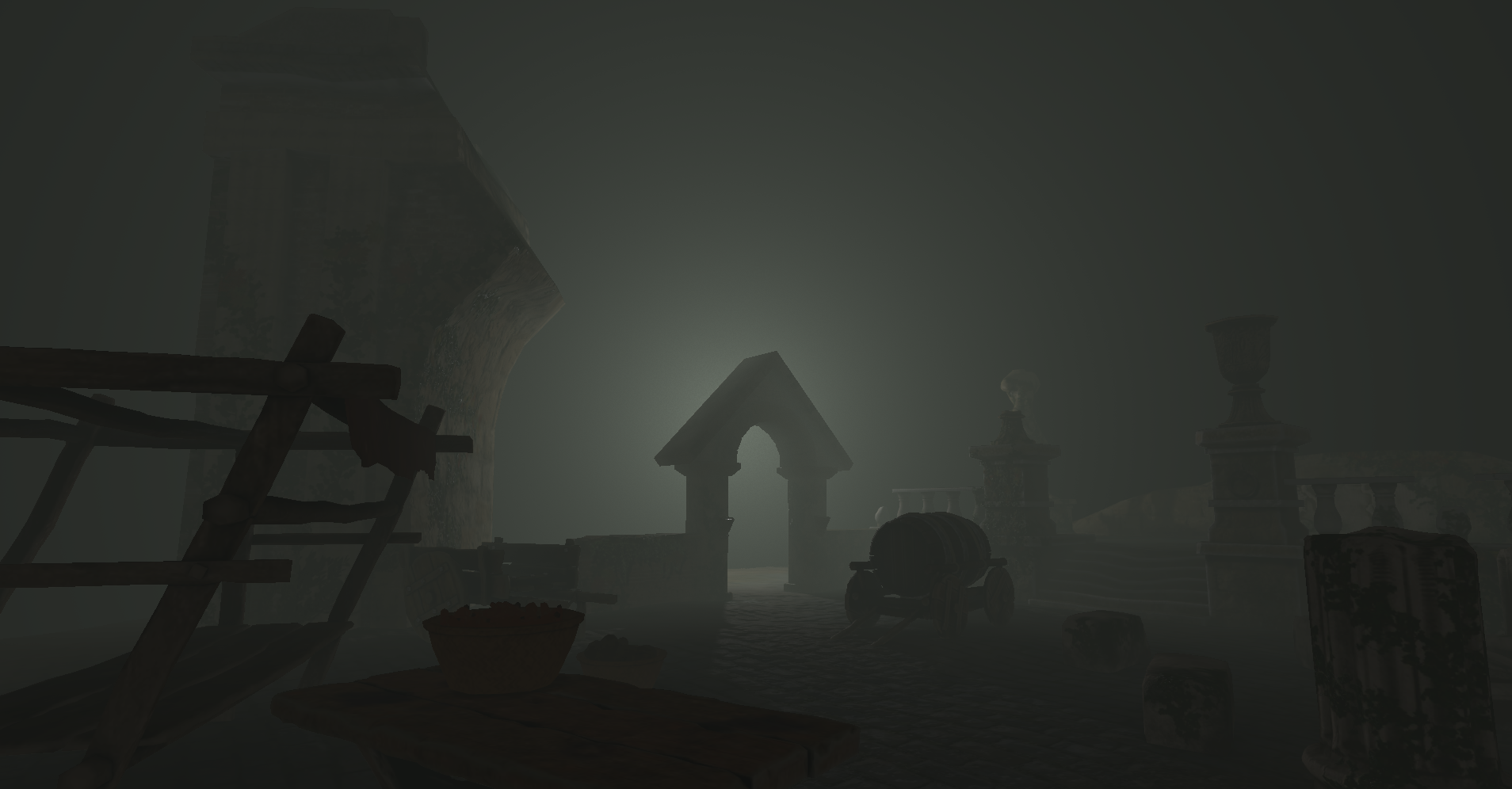

Fog is a graphical feature that is used in games to create atmosphere, depth, tension, and visual hierarchy.

There are many ways to achieve the look of fog, some more "faked" than others. Older or lower-budget titles, for example, may use simple screen-space fog with a linear gradient that fades the scene to a gray or blue color and tends to look flat. While this can work for some games, it tends to break immersion when aiming for realism, because it is disconnected from the world's lighting and geometry. You've likely seen more modern implementations of fog in games like Horizon Zero Dawn (also famous for its awesome-looking cloud rendering), Death Stranding, and Returnal, where fog interacts dynamically with the world's light and geometry.

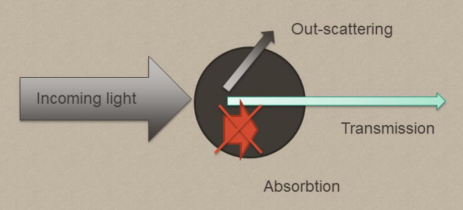

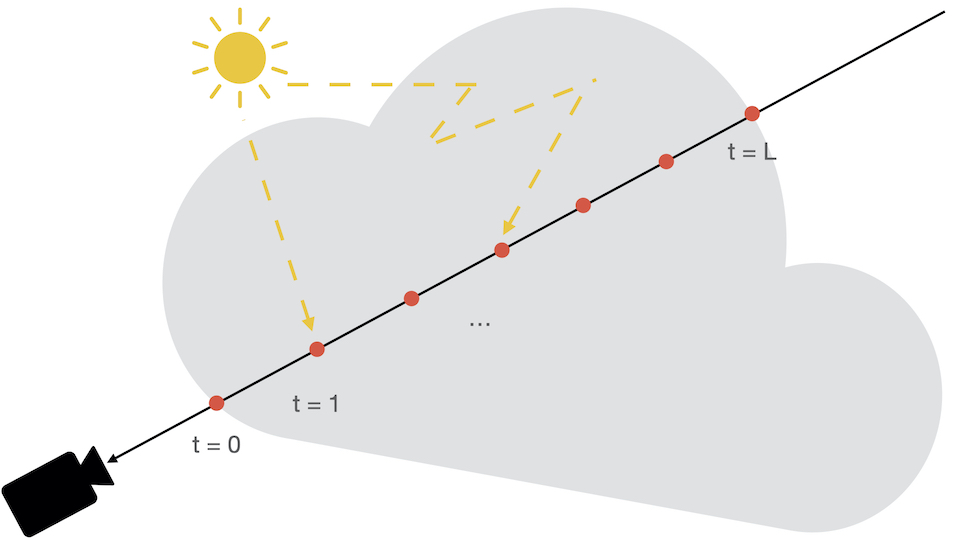

In these implementations, fog is treated as a 3D volume of participating media[1], which is traced through using a technique called ray-marching to simulate light absorption, scattering, soft shadows, and light shafts (also known as god rays).
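To give a rough feel for what ray-marching through fog means, here is a minimal sketch in Python. It is an illustration only, not the implementation from this project or from any of the games mentioned. It assumes a homogeneous fog with constant absorption and scattering coefficients, a single light of constant radiance that reaches every sample unshadowed, and no phase function; the names `march_fog`, `sigma_a`, `sigma_s`, and `light_radiance` are hypothetical.

```python
import math


def march_fog(ray_length, num_steps, sigma_a, sigma_s, light_radiance):
    """March along one view ray through homogeneous fog.

    Returns (in_scattered, transmittance):
      in_scattered  - light scattered toward the camera along the ray
      transmittance - fraction of the background still visible through the fog

    Assumptions (illustrative only): constant coefficients, constant
    unshadowed light, no phase function.
    """
    sigma_t = sigma_a + sigma_s          # extinction = absorption + out-scattering
    step = ray_length / num_steps        # fixed step size along the ray
    transmittance = 1.0                  # nothing is blocked at the camera
    in_scattered = 0.0

    for _ in range(num_steps):
        # Light scattered toward the camera in this segment, dimmed by
        # the fog already accumulated between the camera and the segment.
        in_scattered += transmittance * sigma_s * light_radiance * step
        # Beer-Lambert attenuation across this segment.
        transmittance *= math.exp(-sigma_t * step)

    return in_scattered, transmittance


# Usage: a 10-unit ray through thin fog, sampled 64 times.
fog_light, visibility = march_fog(10.0, 64, sigma_a=0.02, sigma_s=0.08,
                                  light_radiance=1.0)
final_color = 0.5 * visibility + fog_light   # 0.5 stands in for the scene color
```

A real renderer would evaluate the light's visibility (for example via a shadow map) at every step, which is what produces soft shadows and light shafts; that part is left out here to keep the sketch short.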

At the time of writing this article, I'm studying game programming at BUas, where I was given the opportunity

to freely choose a topic for an 8-week self-study project. I had always been fascinated by cinematic looks

in games and wanted to delve deeper into a graphical feature related to this, which led me to choose to

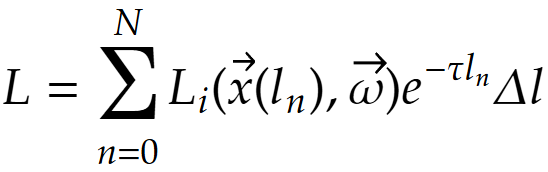

implement an implementation for ray-marched volumetric fog according to the Real-time Volumetric

Lighting in Participating Media paper written by Toth and Umenhoffer[3] (from now on referred to as [TU09]).